Photo by Kevin Ku on Unsplash

Objective of the Project

Speech recognition technology allows for hands-free control of smartphones, speakers, and even vehicles in a wide variety of languages. The World Food Program wants to deploy an intelligent form that collects nutritional information of food bought and sold at markets in two different countries in Africa: Ethiopia and Kenya. The objective of the project is to deliver speech-to-text technology for Amharic language. We used a deep learning algorithm to build a model that is capable of transcribing a speech to text.

Tools Used in the Project

The following tools were used in this project.

MLflow

This is a framework that plays an essential role in any end-to-end machine learning lifecycle. It helps to track your ML experiments, including tracking your models, model parameters, datasets, and hyperparameters and reproducing them when needed. The benefits of MLflow are:

It is easy to set up a model tracking mechanism. It offers very intuitive APIs for serving. It provides data collection, data preparation, model training, and taking the model to production.

Data Version Control, or DVC

This is a data and ML experiment management tool that takes advantage of the existing engineering toolset that we are familiar with (Git, CI/CD, etc.).

Along with data versioning, DVC also allows model and pipeline tracking. With DVC, you don’t need to rebuild previous models or data modeling techniques to achieve the same past state of results. Along with data versioning, DVC also allows model and pipeline tracking.

Continuous Machine Learning (CML)

This is a set of tools and practices that brings widely used CI/CD processes to a machine learning workflow.

Tensorflow

This is an open-sourced end-to-end platform, a library for multiple machine learning tasks. It provides a collection of workflows to develop and train models using Python or JavaScript, and to easily deploy in the cloud, on-prem, in the browser, or on-device no matter what language you use.

Keras

This is a high-level, deep learning API developed by Google for implementing neural networks. It is written in Python and is used to make the implementation of neural networks easy. It also supports multiple backend neural network computation. It runs on top of Tensorflow.

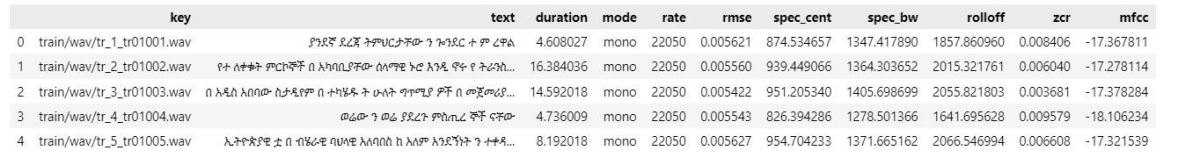

Data

For this project we have used Amharic audio data with transcription, which was prepared by Getalp. They prepared over 10,000 audio clips totaling over 20 hours of speech. Each clip is a few seconds long and comes with its transcription. There are a few hundred separate clips for test purposes as well.

Preparing Training Data

The training data for this project include:

The features (X) are the audio file paths The target labels (y) are the transcriptions

Data Preprocessing

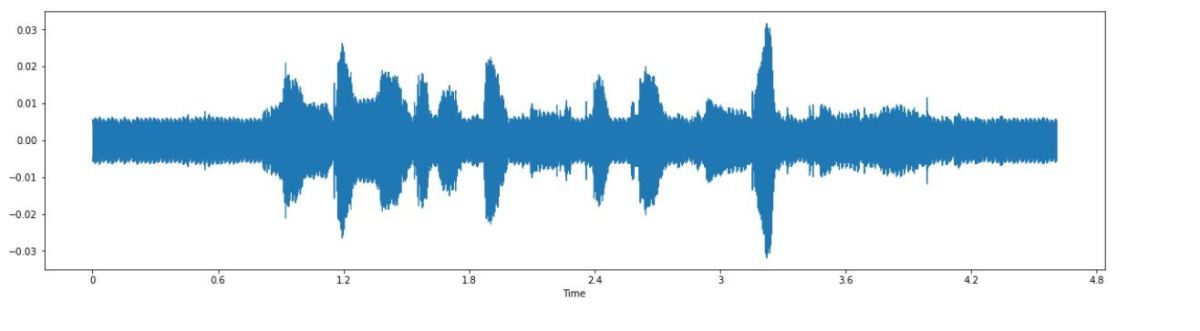

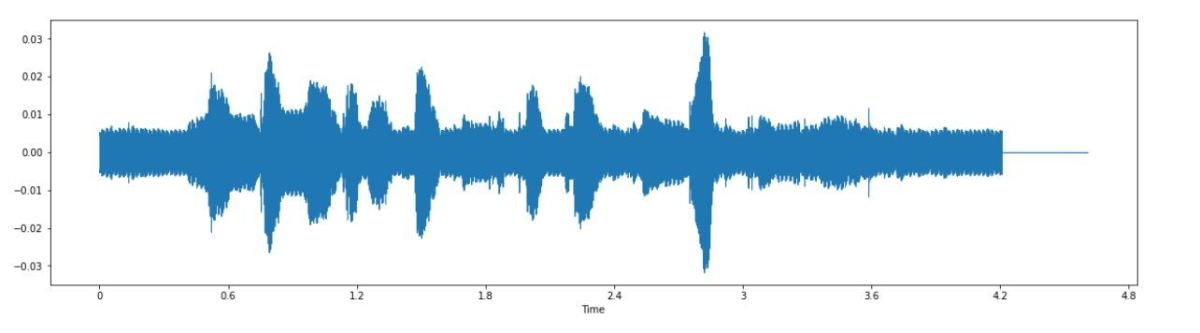

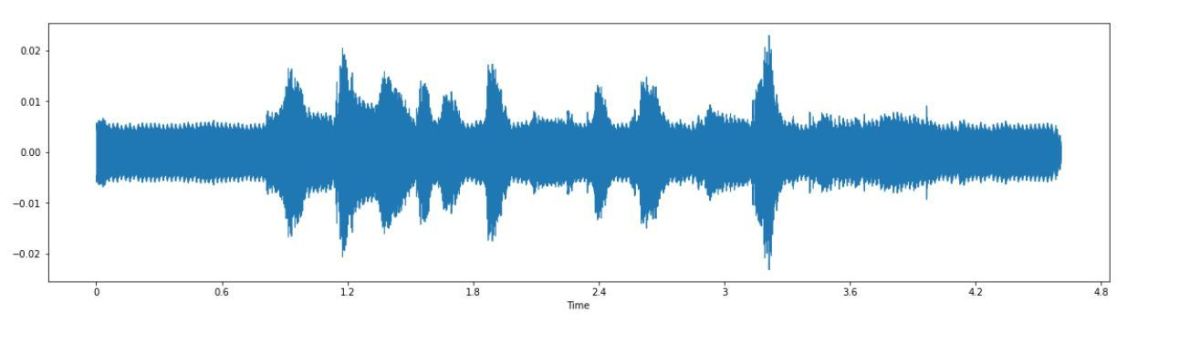

Data Loading: Load audio files using labrosa Python module and get the sample array and sample rate of the audios. Standardize sampling rate: The audio sample rate by default for each audio was 16kHZ. Audio Resizing: All audio files needed to have the same length in order to get a better result with the modeling. So they have resized to nearly four seconds. Convert to channels: All audio files have been converted into stereo channels because every audio file has to have similar channels. Data Augmentation: To increase the amount of data by adding slightly modified data from existing data. It acts as a regularizer and helps reduce overfitting when training a machine learning model. I have showed different augmentation techniques with the below results. Feature Extraction: In this preprocessing step, we created metadata that includes audio features to be used later in the preprocessing pipeline. Metadata’s columns include path to the file, duration of the audio, text (translation) and channel.

Data Augmentation on a Selected Audio Sample

Deep Learning Architectures

There are many variations of deep learning architecture for ASR. Two commonly used approaches are: For this project we have chosen to use the CNN-RNN based architecture.

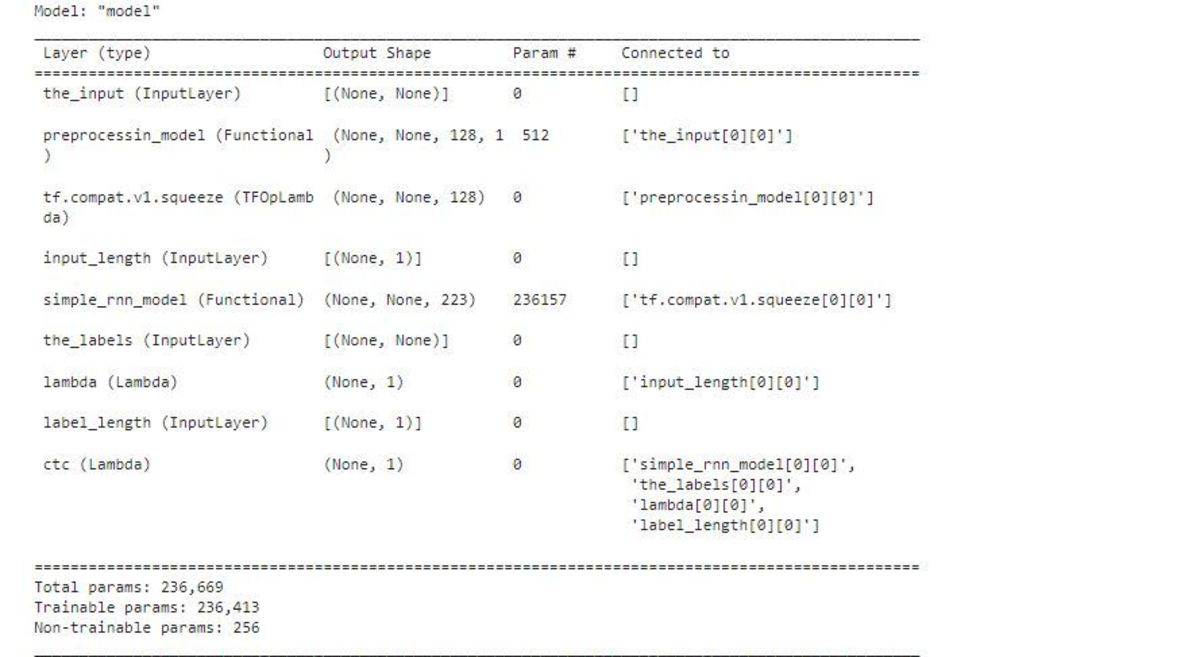

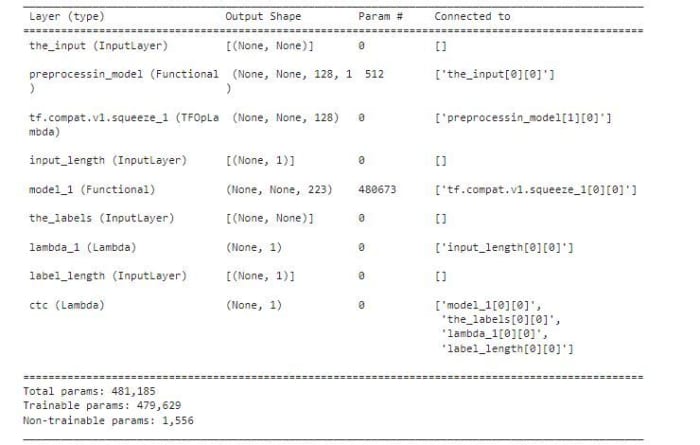

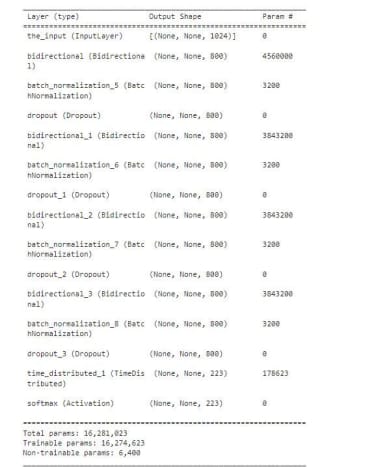

Deep Learning Models and Results

Simple RNN Model: Recurrent neural networks are the most preferred and utilized deep learning architecture due to their ability to model sequential data, including speech recognition. The first model I tried had one RNN layer with Gated Recurrent Unit (GRU), a simplified type of Long-Short Term Memory Recurrent Neuron with fewer parameters than typical LSTM. Finally, there’s a softmax layer to output letter probabilities. CNN with RNN: Convolutional Neural Networks (CNN) are type of deep learning architecture that is specialized to be mostly used in analyzing visual datasets. They will uses the spectrogram of speech signals that are represented as an image and recognize speech based on these features. We used two combination of CNN (one dimensional layer) and RNN (simple RNN and using bidirectional LSTM layer).

Speech Recognition using simple RNN algorithm

Conclusion and Future Work

Throughout the project, a lot of deep learning algorithms have been discussed and as we can see from the result, the more we fine-tuned the parameters of the algorithms we used, the better the results have become. Amharic Language speech recognition is not like many matured speech recognition systems available, and still needs more training data as others have more than 100k hours of data compared to the data we used (20 hrs). Also using language modeling will improve the Amharic sentence prediction which can actually help with better speech recognition.

GitHub Link to the Project

Amharic Speech to textAfrican language Speech Recognition - Speech-to-Text - GitHub - tesfayealex/Speech-to-Text: African language Speech Recognition - Speech-to-Text

This content is accurate and true to the best of the author’s knowledge and is not meant to substitute for formal and individualized advice from a qualified professional.